Chapter 2. Architecture and technical overview

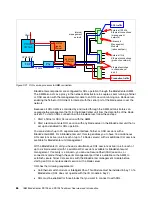

top-of-rack switch. A combination of the appropriate I/O switch module in these bays and the

proper Fibre Channel-capable modules in bays 3 and 5 can eliminate the top-of-rack switch

requirement. See 1.7, “Supported BladeCenter I/O modules” on page 28.

InfiniBand Host Channel adapter

The InfiniBand Architecture (IBA) is an industry-standard architecture for server I/O and

interserver communication. It was developed by the InfiniBand Trade Association (IBTA) to

provide the levels of reliability, availability, performance, and scalability necessary for present

and future server systems with levels significantly better than can be achieved using

bus-oriented I/O structures.

InfiniBand is an open set of interconnected standards and specifications. The main InfiniBand

specification has been published by the InfiniBand Trade Association and is available at the

following web page:

http://www.infinibandta.org/

InfiniBand is based on a switched fabric architecture of serial point-to-point links. These

InfiniBand links can be connected to either host channel adapters (HCAs), used primarily in

servers, or target channel adapters (TCAs), used primarily in storage subsystems.

The InfiniBand physical connection consists of multiple byte lanes. Each individual byte lane

is a four-wire, 2.5, 5.0, or 10.0 Gbps bi-directional connection. Combinations of link width and

byte lane speed allow for overall link speeds of 2.5 to 120 Gbps. The architecture defines a

layered hardware protocol as well as a software layer to manage initialization and the

communication between devices. Each link can support multiple transport services for

reliability and multiple prioritized virtual communication channels.
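
The relationship between link width and byte lane speed can be illustrated with a short calculation. The following Python sketch is illustrative only: it assumes the standard InfiniBand link widths of 1X, 4X, and 12X (the widths are not listed in the text above), and the function names are hypothetical. The results are raw signalling rates per direction; usable data throughput is lower because of encoding overhead.

```python
from itertools import product

# Per-lane signalling rates in Gbps, as listed in the text above.
LANE_RATES_GBPS = (2.5, 5.0, 10.0)

# Assumption: the standard InfiniBand link widths 1X, 4X, and 12X.
LINK_WIDTHS = (1, 4, 12)


def link_speed_gbps(width: int, lane_rate_gbps: float) -> float:
    """Return the raw link speed: number of lanes times the per-lane rate."""
    return width * lane_rate_gbps


def all_link_speeds() -> dict:
    """Map every (width, lane rate) combination to its raw link speed."""
    return {
        (width, rate): link_speed_gbps(width, rate)
        for width, rate in product(LINK_WIDTHS, LANE_RATES_GBPS)
    }


if __name__ == "__main__":
    for (width, rate), speed in sorted(all_link_speeds().items()):
        print(f"{width}X at {rate} Gbps per lane: {speed} Gbps")
```

Under these assumptions, the slowest combination (1X at 2.5 Gbps) and the fastest (12X at 10.0 Gbps) match the 2.5 to 120 Gbps range stated above, and a 4X link at 10.0 Gbps per lane works out to 40 Gbps per port.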

For more information about InfiniBand, read HPC Clusters Using InfiniBand on IBM Power

Systems Servers, SG24-7767, available from the following web page:

http://www.redbooks.ibm.com/abstracts/sg247767.html

The 4X InfiniBand QDR Expansion Card is a 2-port CFFh form factor card and is only

supported in a BladeCenter H chassis. The two ports are connected to the BladeCenter H I/O

switch bays 7 and 8, and 9 and 10.

A supported InfiniBand switch is installed in the switch

bays to route the traffic either between blade servers internal to the chassis or externally to an

InfiniBand fabric.

2.7.4 Embedded SAS Controller

The embedded 3 Gb SAS controller is connected to one of the Gen1 PCIe x8 buses on the

GX++ multifunctional host bridge chip. The PS704 uses a single embedded SAS controller

located on the SMP expansion blade.

More information about the SAS I/O subsystem can be found in 2.9, “Internal storage” on

page 68.

2.7.5 Embedded Ethernet Controller

The Broadcom 2-port BCM5709S network controller has its own Gen1 x4 connection to the

GX++ multifunctional host bridge chip. The WOL, TOE, iSCSI, and RDMA functions of the

BCM5709S are not implemented on the PS703 and PS704 blades.

The connections are routed through the 5-port Broadcom BCM5387 Ethernet switch ports 3

and 4. Then port 0 and port 1 of the BCM5387 connect to the BladeCenter chassis. Port 2 of
